New

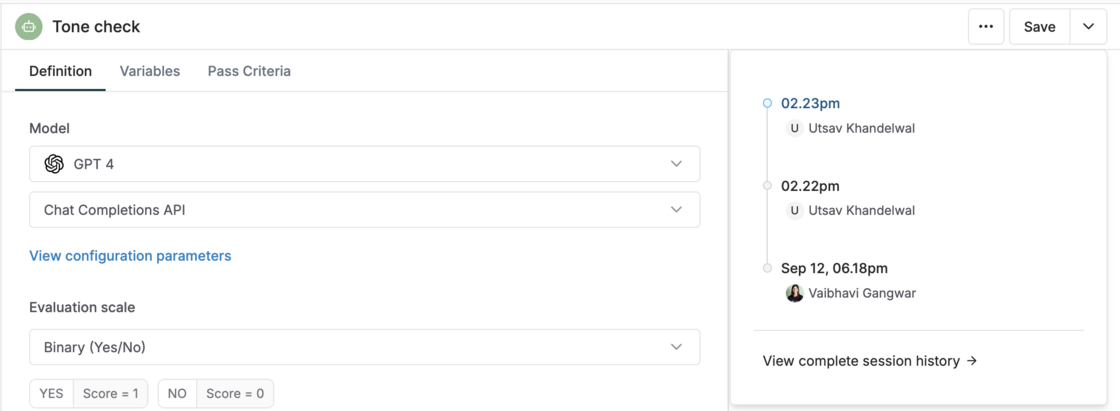

Similar to Prompts and No-code agents, we’ve introduced Session history to Evaluators, giving teams a complete record of all updates made to any evaluator (AI, statistical, human, etc).

Each session captures every change -- from LLM-as-a-judge prompt edits and pass criteria updates to code changes in Programmatic evals -- creating a clear, chronological view of how an evaluator evolves over time. This provides greater transparency and traceability when collaboratively building and experimenting with evals.