New

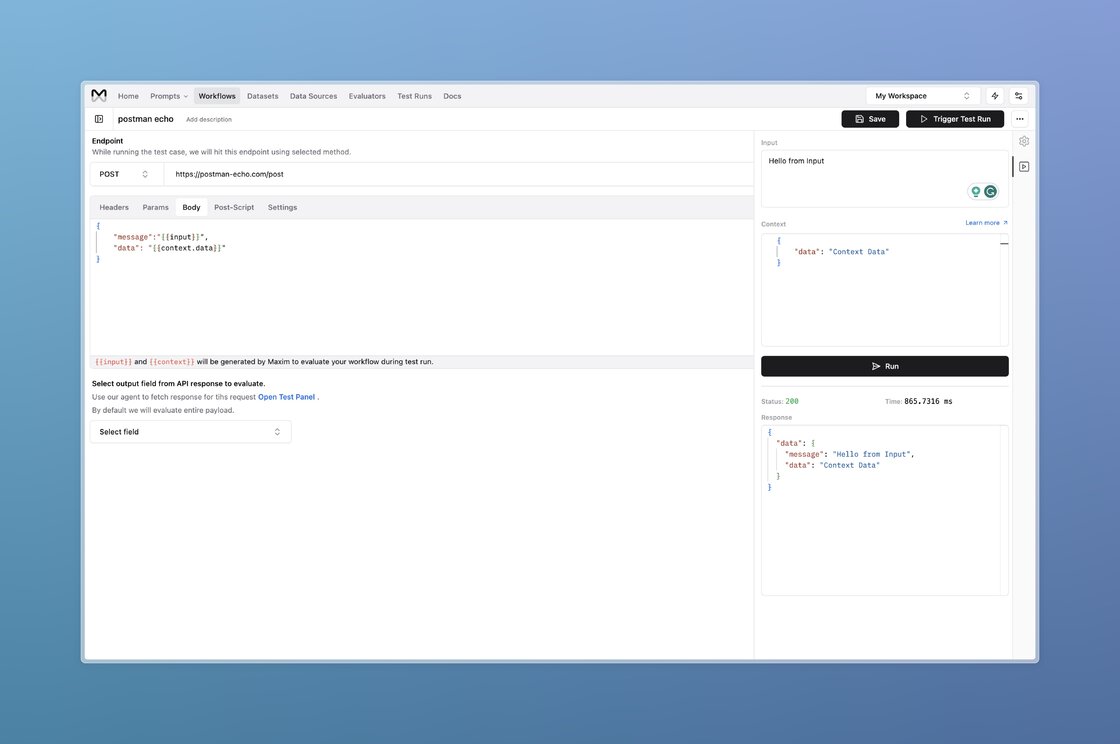

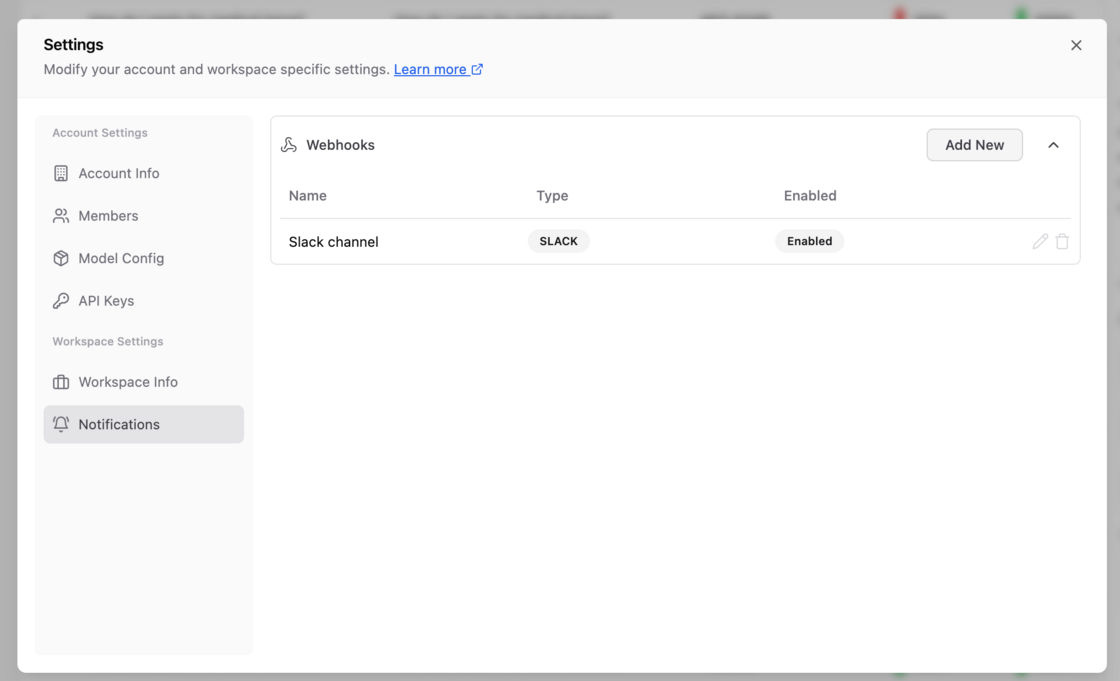

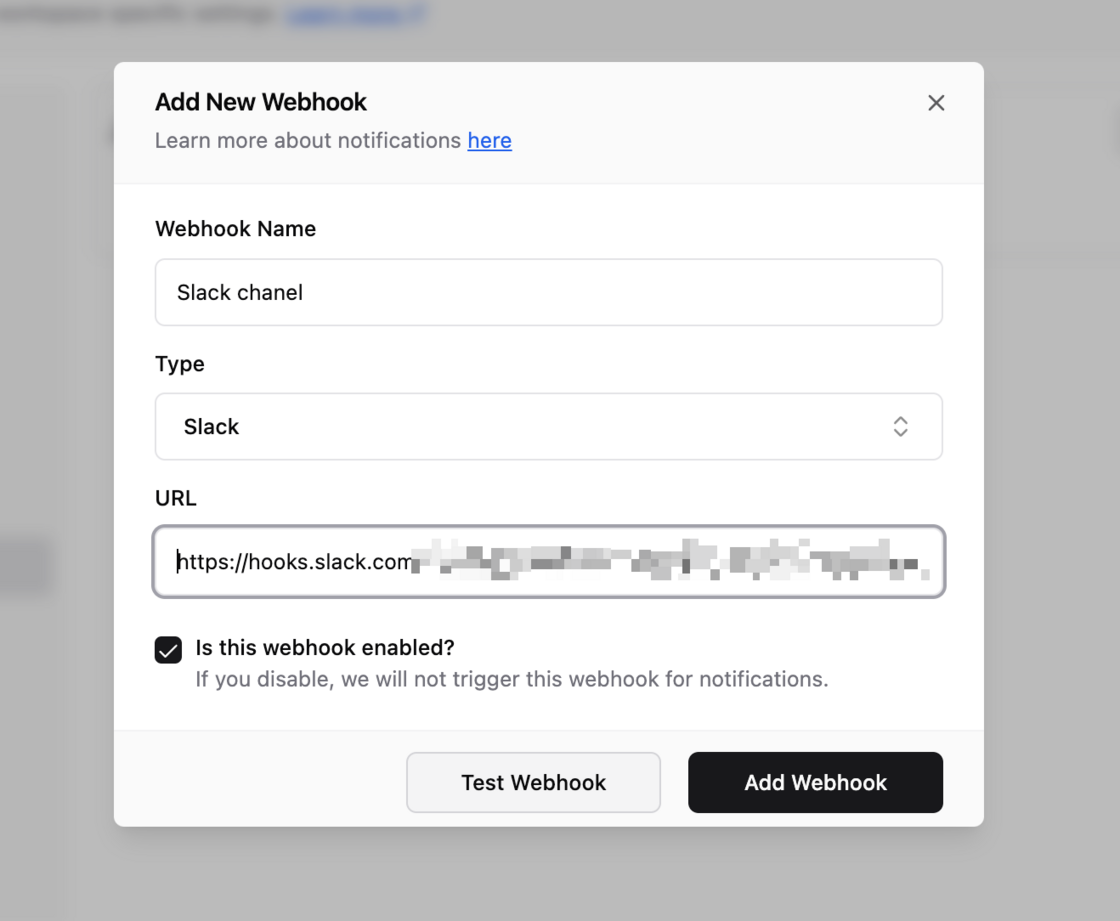

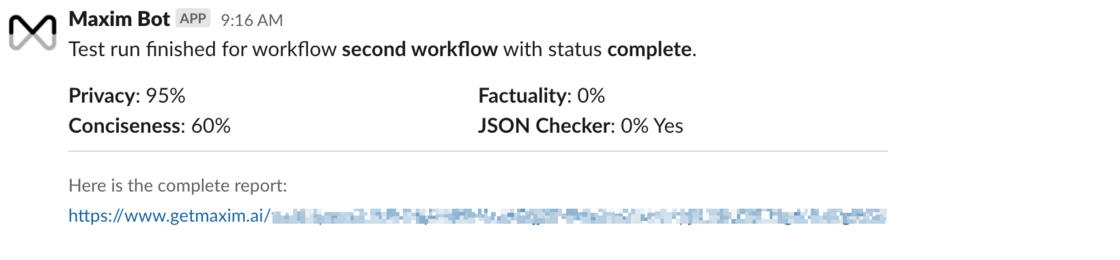

- You can now add custom evaluators (API-based evaluators) using webhooks.

- Expose your evaluator's configuration (endpoint, HTTP method, and payload requirements), and we call that webhook when running test cases.
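As an illustration only, here is a minimal sketch of a webhook evaluator built on Python's standard library. It assumes the webhook is configured with the `POST` method and a JSON payload containing hypothetical `output` and `expected_output` fields, and that it replies with a hypothetical `score`/`passed` JSON object. Your actual endpoint, method, and payload are whatever you configure for the evaluator; the exact-match scoring is a placeholder for your own logic.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def evaluate(payload: dict) -> dict:
    """Placeholder scoring logic: exact match after trimming whitespace.

    The field names "output" and "expected_output" are hypothetical;
    use the payload fields you configured for your evaluator.
    """
    output = str(payload.get("output", "")).strip()
    expected = str(payload.get("expected_output", "")).strip()
    passed = output == expected
    return {"score": 1.0 if passed else 0.0, "passed": passed}


class EvaluatorHandler(BaseHTTPRequestHandler):
    """Handles the (assumed) POST request sent for each test case."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            self.send_error(400, "Request body must be JSON")
            return
        if not isinstance(payload, dict):
            self.send_error(400, "Request body must be a JSON object")
            return

        body = json.dumps(evaluate(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Start the webhook server; blocks until interrupted."""
    HTTPServer(("0.0.0.0", port), EvaluatorHandler).serve_forever()
```

Calling `serve()` starts the server on port 8080; you would then register its public URL as the evaluator's endpoint. Keeping the scoring in a plain `evaluate` function makes it easy to unit-test without running a server.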

Improvement

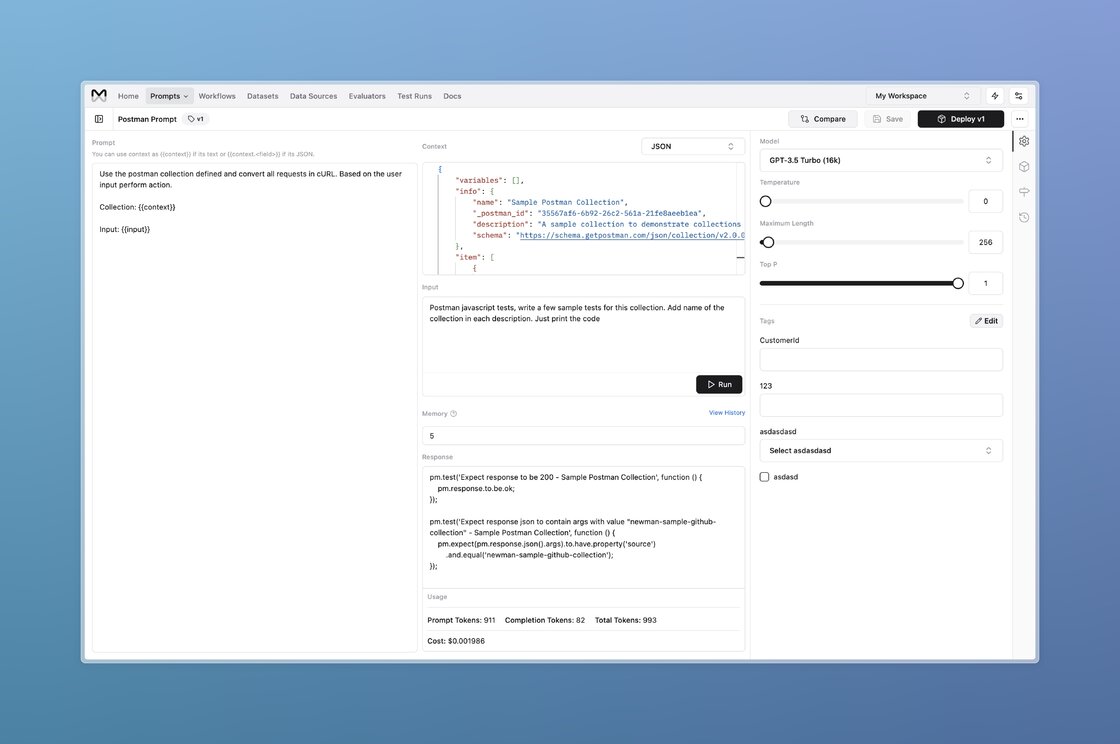

- Custom AI Evaluators now support the `context` field. This helps you evaluate test set entries more accurately.

Fix

- We fixed dataset import from CSV so that it now reads the `context` field.
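To illustrate what this fix means for a CSV file, here is a sketch that reads a test set with a `context` column into entries. The column names `input`, `expected_output`, and `context` are hypothetical; use the headers your test set actually defines. This is not our import implementation, only a way to check that your file carries a `context` value per row.

```python
import csv
import io

# Hypothetical test set with a context column; quoted cells may contain commas.
SAMPLE_CSV = """input,expected_output,context
"What is the capital of France?",Paris,"France is in Western Europe. Its capital is Paris."
"""


def load_entries(csv_text: str) -> list[dict]:
    """Parse CSV text into test set entries, keeping the context column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    entries = []
    for row in reader:
        entries.append({
            "input": row["input"],
            "expected_output": row["expected_output"],
            # Keep context instead of dropping it; default to "" if the column is absent.
            "context": row.get("context") or "",
        })
    return entries
```

For example, `load_entries(SAMPLE_CSV)[0]["context"]` returns the context sentence for the first row, while a file without a `context` column yields entries with an empty context.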